Factfulness is fictional

How much of the economics of climate change is just confirmation bias?

Climate change is real and a challenge. Economists widely agree that a price on carbon is the most efficient way to meet that challenge. But what is an adequate price?

Given how central this price is to climate change policy, it should give us pause that Canadian Environment Minister Steven Guilbeault recently announced that the price on carbon should now be about $247, nearly five times the old estimate of $54, and that Biden’s EPA proposes a value of $190, while the Trump administration estimated the relevant number to be between $3 and $5 per ton.

To justify such a significant change in pricing, you would probably expect that scientists had finally come up with an objective way of measuring precisely what until now could only be guessed, as when we were finally able to measure the mass of an elementary particle or the speed of light. Did anything like that happen? Let’s have a look.

Prices on Carbon

A price on carbon internalizes the cost of carbon emissions into the prices of products and services. This should lead producers to look for low-CO2 inputs or alternative product lines and, all else being equal, consumers to choose lower-CO2 products; market forces can thus do a lot of the heavy lifting for decarbonization. Furthermore, a price on carbon makes it easy to calculate the benefit of any policy with regard to carbon emissions. Normally, prices emerge via market forces and convey a lot of information about capital, relative scarcity, and so on. The price of carbon, on the other hand, is a political price. How do you set that price accurately?

Even the theoretical answer isn’t quite straightforward, and there are at least four different options:

Option 1: Use the social cost of carbon.

Option 2: Use “target-consistent pricing”.

Option 3: Use Carbon Takeback Obligations.

Option 4: Set an arbitrary price (Carbon Coins are an example of this).

The social cost of carbon (SCC) is an estimate of the cost, in dollars, of the damage done by each additional ton of carbon emissions. To get to these estimates, “climate scientists and economists create models to predict what will happen to a range of indicators when new carbon dioxide is put into the atmosphere. Among these indicators are health outcomes, agricultural production, and property values. An extra ton of carbon emissions shortens lifespans, hurts crops, and causes sea levels to rise, decreasing property values.” (Source)

Target-consistent pricing works by setting a goal, for example reducing carbon dioxide emissions by 45% by 2030 (presumably because the desirability of that goal has been established satisfactorily), and then estimating what price per ton of carbon would be needed in each year to enforce the desired reduction.
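To make the mechanism concrete: if emitters cut emissions until their marginal abatement cost equals the carbon price, the target-consistent price in a given year is just the marginal abatement cost of the cut required in that year. The Python sketch below assumes a hypothetical linear marginal abatement cost curve (`mac_slope`), constant baseline emissions, and a linear phase-in; the function name and all numbers are illustrative, not estimates.

```python
def target_consistent_prices(baseline_t, target_cut, start_year, target_year, mac_slope):
    """Return {year: price per ton} that delivers a linearly phased-in emissions cut.

    Hypothetical assumptions (not taken from any official model):
    - baseline emissions stay constant at `baseline_t` tons per year;
    - the cut rises linearly from 0 to `target_cut` (e.g. 0.45) by `target_year`,
      which must be later than `start_year`;
    - marginal abatement cost is linear: MAC(q) = mac_slope * q dollars per ton,
      where q is the number of tons abated per year.
    Emitters abate until MAC equals the price, so the required price is MAC(q_required).
    """
    n_years = target_year - start_year
    prices = {}
    for i in range(n_years + 1):
        year = start_year + i
        required_abatement = baseline_t * target_cut * i / n_years
        prices[year] = mac_slope * required_abatement
    return prices


# Hypothetical inputs: 500 Mt/yr baseline, 45% cut by 2030,
# MAC rising by $0.20/t for every additional Mt abated.
path = target_consistent_prices(500e6, 0.45, 2024, 2030, 0.2e-6)
for year, price in path.items():
    print(year, round(price, 2))
```

With these made-up inputs the price climbs from zero to about $45 per ton in 2030; the hard part in practice is knowing the real abatement cost curve.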

Carbon Takeback Obligations work by mandating that producers of fossil fuels verifiably put a yearly increasing percentage of the CO2 generated by their sold products into geological storage. The cost of doing so imposes a price. Interestingly enough, this price is predominantly determined by the technology of the competing fossil fuel companies and not by external sources. Short of a cartel including all carbon-removal companies, competition should incentivize these companies to charge their true costs.
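Under such an obligation, the implied price per ton of CO2 from sold products is simply the mandated storage share times the going price of storage, which is the part set by competing suppliers. A minimal Python sketch; the function name, the schedule, and the storage costs are hypothetical and chosen only for illustration:

```python
def takeback_price_per_ton(stored_fraction, storage_cost_per_ton):
    """Implied cost per ton of CO2 from sold products under a takeback obligation.

    A producer whose products emit 1 t of CO2 must store `stored_fraction` t,
    paying `storage_cost_per_ton` for each stored ton (both hypothetical inputs).
    """
    if not 0.0 <= stored_fraction <= 1.0:
        raise ValueError("stored_fraction must be between 0 and 1")
    return stored_fraction * storage_cost_per_ton


# Hypothetical schedule: the mandated share rises 5 percentage points per year,
# while the market price of storage is assumed to fall from $150 to $100 per ton.
schedule = {2030: (0.05, 150.0), 2031: (0.10, 140.0), 2032: (0.15, 125.0), 2033: (0.20, 100.0)}
for year, (share, cost) in schedule.items():
    print(year, round(takeback_price_per_ton(share, cost), 2))
```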

Given how desirable and important a price on carbon would be, why don’t we just use the correct price and be done with it? Well, the trouble is that you cannot measure the price of carbon in the future. You have to estimate it.

Let’s look at some of the problems with that.

Climate Sensitivity

Climate sensitivity is one of the most important parameters: it describes the relationship between an increase of CO2 in the atmosphere and the corresponding change in temperature. It is typically given in kelvin (K) of warming per doubling of CO2. Climate sensitivity is so important because the damage caused by CO2 is mainly not a direct effect of CO2 (ocean acidification being the exception), but stems from the increase in temperature it produces. Higher temperatures are expected to cause changes in precipitation, sea-level rise, and changes in droughts, floods, storms, wildfires, etc.

Fortunately for humanity, it seems like the relationship is logarithmic, meaning that each absolute increase in CO2 has a diminishing absolute effect on temperature.
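A minimal Python sketch of that logarithmic relationship, assuming the simplified equilibrium form warming = S × log2(C / C0) with a round pre-industrial baseline of 280 ppm and ignoring the lag between forcing and equilibrium. The values of S are the ends and midpoint of the long-standing 1.5 to 4.5 K range:

```python
import math


def equilibrium_warming(co2_ppm, sensitivity_k, preindustrial_ppm=280.0):
    """Equilibrium warming in K under a simplified logarithmic relationship.

    Warming scales with log2 of the concentration ratio, so each doubling
    of CO2 adds `sensitivity_k` kelvin regardless of the starting level.
    """
    return sensitivity_k * math.log2(co2_ppm / preindustrial_ppm)


for s in (1.5, 3.0, 4.5):  # low end, midpoint and high end of the range
    levels = [equilibrium_warming(c, s) for c in (280, 380, 480, 580)]
    increments = [round(b - a, 2) for a, b in zip(levels, levels[1:])]
    print(f"S = {s} K/doubling: warming added by each extra 100 ppm = {increments}")
```

At every sensitivity, each additional 100 ppm adds less warming than the previous 100 ppm.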

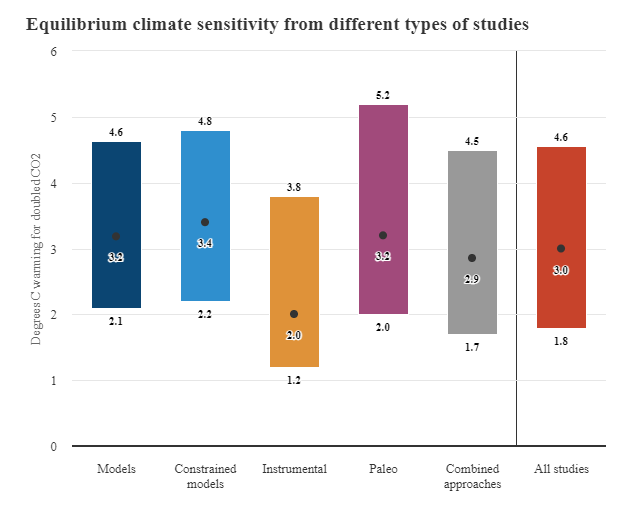

So what is the climate sensitivity? We only know its uncertainty range, which additionally differs between lines of evidence.

While the numerical range of uncertainty has been remarkably stable at roughly +1.5 to +4.5 K per doubling since at least 1979, it has been claimed that we have since learned vastly more about the effects of CO2, and that earlier estimates underreported their uncertainty while arriving at the same range pretty much by chance. In 1896, Svante Arrhenius estimated an increase of 5–6°C per doubling of CO2, but he neglected various factors of the climate system that are included today.

It is unfortunate that we cannot pin it down better, because you can argue that sensitivities at the lower end of the estimates, consistent with instrumental evidence, are low enough to make the SCC in a standard model drop to nearly zero, while higher estimates, consistent with paleoclimate evidence, give rise to far higher temperature projections and thus to far higher costs of carbon.

But how exactly does an increase in temperature map to costs? This is done via a damage function.

Damage Functions

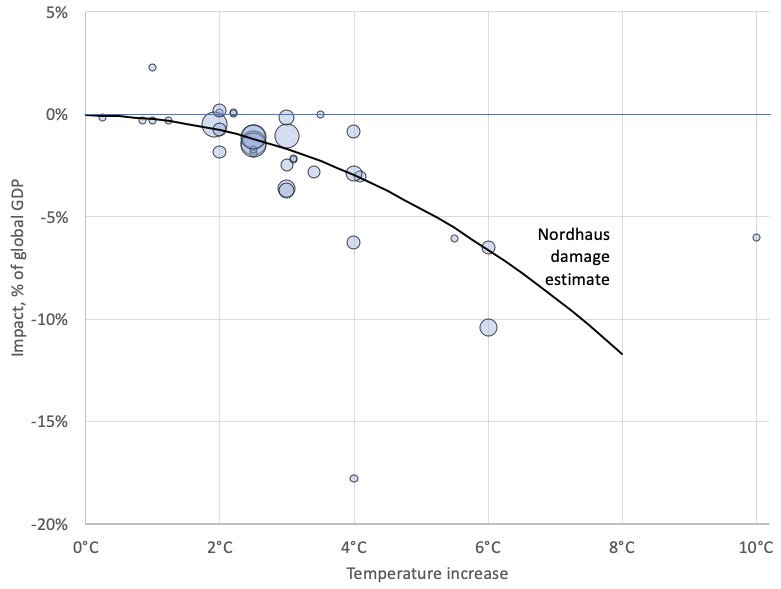

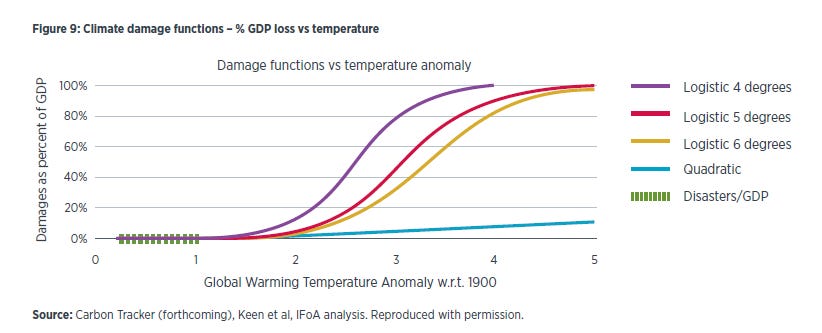

There is a wide-ranging debate about what the damage function should look like. Nordhaus won a Nobel Prize in part for his work on the shape of the damage function, and much subsequently published research has supported both his general type of damage function and the general range of his damage estimates: a few percentage points of lost GDP at the temperature increase predicted for 2100. The “best guess” is something like -2.1% of GDP at 3 K of warming.
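For illustration, here is the quadratic damage form used in Nordhaus’s DICE model, sketched in Python with the coefficient calibrated to the -2.1% at 3 K figure rather than taken from any specific DICE version; the function name and the calibration are my assumptions:

```python
def quadratic_damage(warming_k, coeff=0.021 / 3.0**2):
    """Fraction of GDP lost at a given warming, using a DICE-style quadratic form.

    The default coefficient is calibrated so that 3 K of warming costs 2.1% of GDP.
    It is an illustration, not a fitted value. Losses are capped at 100% of GDP.
    """
    return min(1.0, coeff * warming_k**2)


for t in (1.0, 2.0, 3.0, 4.0, 6.0):
    print(f"{t} K -> {quadratic_damage(t):.2%} of GDP")
```

In this form, doubling warming from 3 K to 6 K quadruples damages; the alternative damage functions rise far more steeply at the high end.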

But of course, there are other assumptions for the type of damage function. For some of these, an increase in global average temperature of 3°C would mean the complete annihilation of the economy and probably most, if not all, of humanity.

Why do these estimates diverge so sharply? One part of the answer lies in the respective researchers’ assumptions about the dynamics of the climate system; a point of great contention is the existence and the effects of climate tipping points. Another part of the answer lies in the assumptions about the future.

“Tipping point” is the name for self-reinforcing mechanisms in the climate system that, once a threshold temperature has been crossed, lead to “runaway” warming. A common example is Arctic permafrost thaw: the idea is that thawing will release huge amounts of trapped methane, a greenhouse gas, which increases the temperature, which accelerates the thaw and releases even more carbon. Another is forest fires in Canada, which emit huge amounts of carbon; the warming caused by these emissions promotes more fires, which release more carbon into the atmosphere, and so on.

Fortunately for us: “For the set of likely future scenarios we face today, the climate science literature has not identified any approaching global tipping point after which runaway climate change intensifies beyond humanity’s ability to arrest it. While tipping elements of the climate system—like Arctic permafrost thaw and loss of Amazon forest area—do influence total warming, the magnitude of this influence is substantially smaller than the societal factors that will ultimately determine the planet’s climate trajectory.” (Source)

But what if? What if runaway warming is a high-impact, low-probability fat-tail risk? Or what if climate change alters rainfall patterns in ways that start a war over water that ends in global nuclear war? Those questions are what keep the debate about the damage function going.

We simply lack the knowledge about the future to rule all these scenarios in or out with certainty.

What seems less controversial today is that the idea of “baked-in” warming, i.e. future warming resulting from past emissions regardless of human action today, seems to be false. Apparently, it has been known for quite some time that this is an artifact of older, coarser models and of conflating constant CO2 concentrations with net-zero emissions.

For what it’s worth, the good news is that it looks like humanity’s hands are on the thermostat. We are the masters of how much carbon we emit and we are responsible for the resulting warming.

Speaking of which: what do we assume about the future, the other source of disagreement about the shape of the damage function, when assessing the damages?

Visions for the future

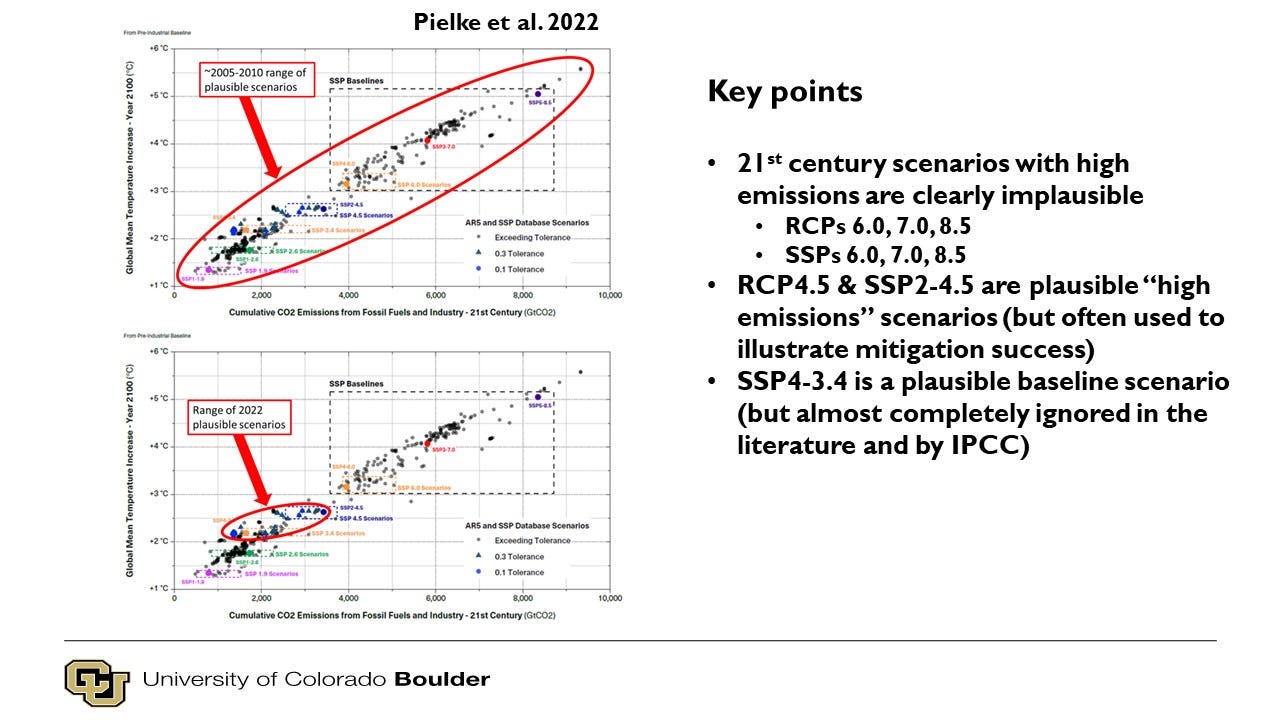

Climate scientists use reference scenarios to “gauge” their models and make research more comparable. For example, the widely used Shared Socioeconomic Pathways (SSPs) provide narratives for different, at least plausible, socioeconomic developments. These scenarios try to tell a logically coherent qualitative story, backed by quantitative elements like population growth, economic growth, technological change, land-use change, and so on.

Representative Concentration Pathways (RCPs) discard the necessity for a coherent narrative and simply pose different scenarios for the radiative forcings in the future. This cuts “the link between the socioeconomic characteristics underlying the scenarios (population change, economic growth, and so on), the emissions scenarios they provided for climate models, and the climate futures those models would predict. The effect of the separation was to save time while abandoning any commitment to evaluating the scenarios and pathways for plausibility or probability.”

This allows modelers to investigate hypothetical worlds with extremely high emissions but extremely low development and climate resilience.

The trouble is: a lot of the researchers are using long-outdated scenarios:

The most commonly-used scenarios in climate research have already diverged significantly from the real world, and that divergence is going to only get larger in coming decades. […] Evidence is now undeniable that the basis for a significant amount of research has become untethered from the real world. The issue now is what to do about it. [Our] literature review found almost 17,000 peer-reviewed articles that use the now-outdated highest emissions scenario. - THB

Using a scenario with implausibly high emissions also introduces high-end effects of warming (storms, sea-level rise, etc.) that afflict the modeled populations and thus inflate the calculated climate damages. Using plausible scenarios should therefore be a necessary, but not sufficient, condition for a study to be taken into consideration for concrete policies.

The cost of carbon further depends on how technologies will be used in the future. Will we build dams to reduce the costs of flooding? Will we refrain from building in wildfire or flood zones? Will we use drought- or salt-resistant crops? Will we build cold shelters? Early warning systems? Storm-proof buildings? Will we improve our wildfire management strategies? All of the assumed or modeled answers to these questions influence the estimated climate damages.

This is especially visible in the decision about which areas to include in the damage assessment. The Trump administration only looked at damages in the USA, a high-income, high-technology country with reasonably good governance, stable institutions, vast fertile lands for agriculture, and the geopolitical safety of ocean-sized moats and the world’s most powerful military, to arrive at its SCC of $3-$5.

Not all countries are that well situated. Looking at the whole world multiplied that SCC more than tenfold, to $51. Richard Tol, serving as a Convening Lead Author for the IPCC AR5 Working Group II report, assessed “that many of the more dramatic impacts of climate change are really symptoms of mismanagement and poverty and can be controlled if we had better governance and more development.” (Source)

The highest uncertainty, by its very nature, concerns technologies yet to be invented or commercialized. It’s unclear how to account for the possibility of cheap CCS technology, a nuclear renaissance in fast or thermal fission, fusion, advances in geothermal power, battery storage, solar radiation management, or direct air capture (DAC). In fact, a reliable, scalable, carbon-free source of power that is cheaper than coal without any subsidies could alter our paths substantially. So could the emergence of AGI, or even narrow AI in relevant sectors.

Another major influence on the cost of carbon is the discount rate. Discounting future damages at 0% will produce substantially different results from a discount rate of 4%, or even 8%. The former puts the burden of paying for all of the future’s climate damages on today’s populations; higher rates increasingly shift the burden to future generations, who will presumably be far richer and technologically more capable than today’s.
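A quick Python sketch of how much the discount rate matters, using plain constant-rate exponential discounting (a simplifying assumption; other schemes exist) and a hypothetical $1,000 of damage occurring 100 years from now:

```python
def present_value(future_damage, rate, years):
    """Value today of a damage occurring `years` from now, discounted at a constant annual `rate`."""
    return future_damage / (1.0 + rate) ** years


# Hypothetical example: $1,000 of damage occurring 100 years from now.
for rate in (0.0, 0.04, 0.08):
    print(f"{rate:.0%}: ${present_value(1000.0, rate, 100):,.2f}")
```

At 0% the full $1,000 counts today; at 4% it is worth roughly $20, and at 8% less than 50 cents.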

Social Cost of Carbon

To recapitulate the discussion about the SCC: it depends on the assumed climate sensitivity of CO2 and other greenhouse gases (for example methane), on the assumed climate damage function, on what you assume about societies, economies, and technologies from now until 2100 (and often far beyond), and on the arbitrary choice of a “suitable” discount rate.

Given all that, it shouldn’t be too surprising that the subjectivity and arbitrariness going into these estimates determine the results, in a kind of policy- or preference-based fact-making.
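To see how much these inputs move the result, here is a deliberately crude toy model in Python that strings the pieces together: a logarithmic sensitivity, a DICE-style quadratic damage function, a growing economy, and a constant discount rate. It is not any official model. The function name and every default (about $100 trillion of world GDP, 2% growth, CO2 rising 2 ppm per year from 420 ppm, roughly 7.8 Gt of CO2 per ppm, a pulse that never leaves the atmosphere, instant equilibrium warming) are round, hypothetical assumptions chosen only to show the mechanics.

```python
import math


def toy_scc(sensitivity_k, discount_rate, damage_coeff=0.021 / 9.0, gdp0=100e12,
            gdp_growth=0.02, ppm0=420.0, ppm_rise=2.0, horizon_years=200,
            pulse_t=1e9, t_co2_per_ppm=7.8e9):
    """Toy social cost of carbon in dollars per ton of CO2 (illustrative only).

    Hypothetical simplifications:
    - the CO2 pulse stays in the atmosphere for the whole horizon;
    - warming is the instant equilibrium response T = S * log2(C / 280 ppm);
    - damages are a quadratic share of GDP, calibrated to 2.1% at 3 K.
    """
    pulse_ppm = pulse_t / t_co2_per_ppm
    total = 0.0
    for year in range(horizon_years):
        base_ppm = ppm0 + ppm_rise * year
        t_base = sensitivity_k * math.log2(base_ppm / 280.0)
        t_pulse = sensitivity_k * math.log2((base_ppm + pulse_ppm) / 280.0)
        extra_share = damage_coeff * (t_pulse**2 - t_base**2)  # extra share of GDP lost
        gdp = gdp0 * (1.0 + gdp_growth) ** year
        total += extra_share * gdp / (1.0 + discount_rate) ** year
    return total / pulse_t  # discounted extra damages per ton of the pulse


for s in (1.5, 3.0, 4.5):
    for r in (0.01, 0.03, 0.05):
        print(f"S = {s} K/doubling, r = {r:.0%}: ${toy_scc(s, r):,.0f} per ton")
```

Because warming enters the damage function squared, the toy result scales with the square of the sensitivity: going from 1.5 to 4.5 K per doubling multiplies it by nine before the discount rate is even touched, and the discount rate then shifts it again.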

Ross McKitrick recently noted that

[SCC estimates] are if-then statements. They are not intrinsically true or false: what matters is the credibility of the assumptions. If emissions follow the RCP8.5 scenario (which they won’t), and if people don’t adapt to climate change (which they will), and if CO2 and warm weather stop being good for plants (which is unlikely), then the SCC could be five times larger than previously thought. More likely it isn’t, and very well could be much smaller.

Richard Tol, an economist with deep experience in the social cost of carbon, also emphasizes the effect of the subjective and largely arbitrary assumptions on SCC estimates, which vary by orders of magnitude AND sign:

Published estimates range from -$771/tC [dollars per ton of carbon] to +$216,035/tC.

Research cannot reduce the span of credible estimates by much, as the future is uncertain and ethical parameters are key.

Roger Pielke Jr. sums it up very nicely:

The SCC can be whatever you, I or President Biden wants it to be, and a very science-y justification can be produced in support of this, that or another estimate. Ultimately it is science theater for regulatory policy.

So if the social cost of carbon is a largely arbitrary metric that can be laundered at will to support preconceived policy preferences, target-consistent pricing is surely better, right? Just set a target and price carbon accordingly to reach it!

The 1.5°C Target

Well, where does our 1.5°C target come from? Is it hard science, derived from physically meaningful and well measured boundaries?

Nope. It’s an arbitrary communication device invented for political purposes (namely, placating some island nations at the Paris Climate Summit in 2015).

However, the scientific community —rather than loudly proclaim that the aspirational targets were exactly that and practically unreachable — followed the political demand for scientific justification after the fact to legitimize the impossible goal as an actual target to guide policy. - THB

The 1.5°C goal is realistically not achievable short of a complete collapse of China, India, and subsequently almost all of the rest of the world economy.[1]

It should be well known by now that there is no easy substitute for fossil fuels, which are naturally abundant, concentrated, storable, versatile, and competitive; they supply over 80% of the world’s energy consumption.

For some of the most important substances (cement, steel, fertilizers, plastics), we do not have mature CO2-free alternatives.

Nuclear power seems like a possible substitute, given some of its intrinsic properties (abundance, energy density, …), but it is stymied by cost (probably to a large degree caused by regulation). Even with risk-appropriate regulation, the build-out speed required to hit the promised goal is mind-boggling.

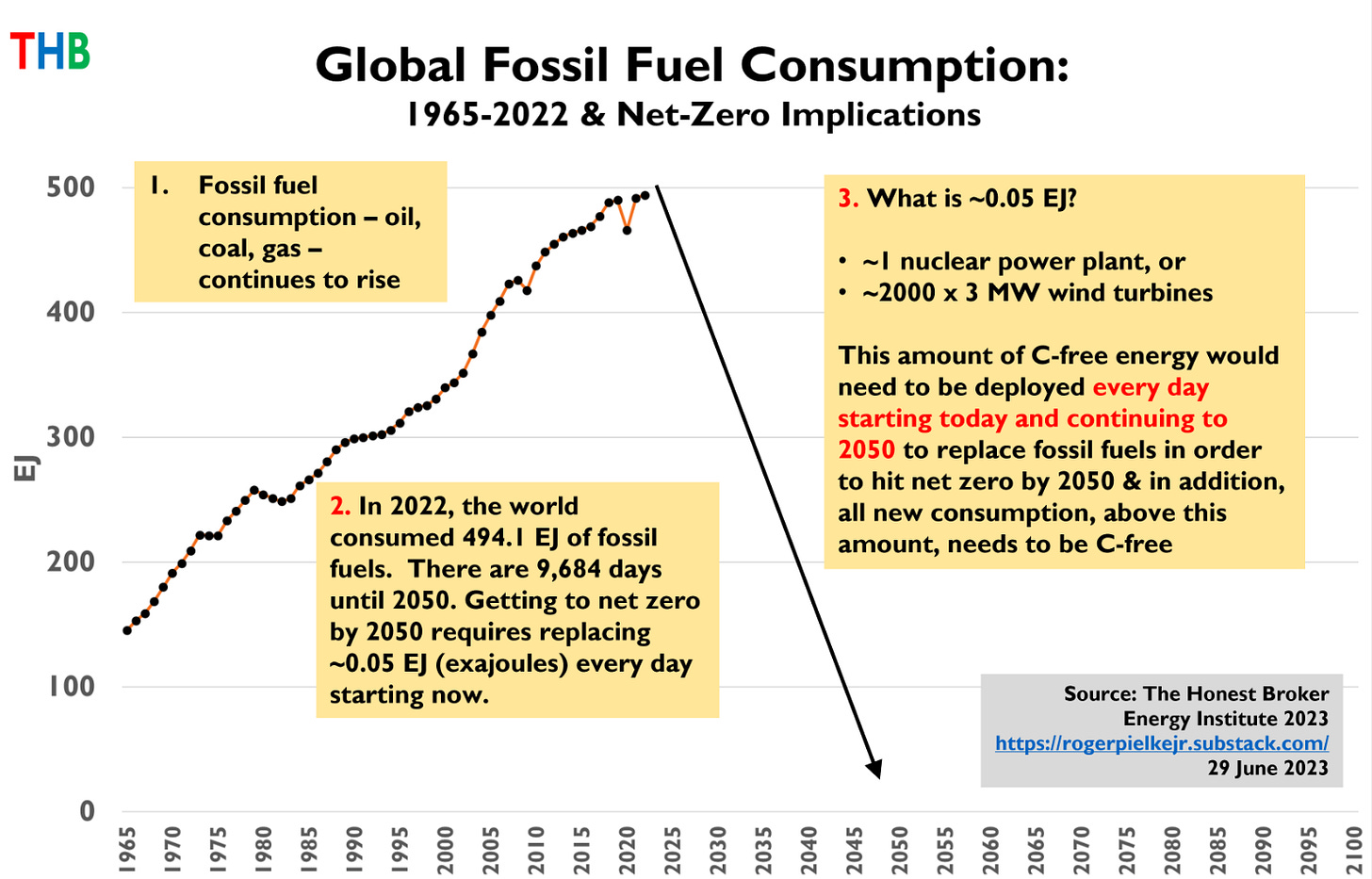

Hitting net zero by 2050, assuming no huge reduction in energy demand, poses gigantic challenges and costs: we would need to build one large nuclear power plant, or 2,000 3-MW wind turbines, every day from now until 2050.

But even that speed does not come close to reaching the 1.5°C goal. For even a 66% chance of staying below 1.5°C of warming, we would need to hit net-zero emissions by 2030, not by 2050. And this does not account for any growth in energy consumption, for example by the roughly 3 billion people who use less energy than an American fridge.

Footing the bill

Consumers, voters and politicians seem quite adamantly unwilling to pay the price for reaching that goal via economic hardship or carbon prices at a target-consistent level.

This has spawned a whole industry advocating for non-state global governance. It’s an emergency, so fast, transfer all the power to your betters!

No need for democracy! Who would these betters be? Someone intelligent, incorruptible, honest, and unbiased, to tell us what “The Science” says, and all policies flowing from that must be accepted. Or else.

It seems like the scientists and heads of international bodies are “selflessly” applying for that role.

Given the shortcomings of centralized, non-market economic systems, which have been well studied[2] and tested regrettably thoroughly, that does not seem like a promising way forward. Especially because a noteworthy difference from previous incarnations of such economic systems is that those at least paid lip service to the idea of improving the economic lot of the population, while this incarnation vows to focus on the opposite.[3]

It’s not too surprising that this does not go over well with the intended “beneficiaries” of these policies.

In summary, the social cost of carbon and the target-consistent pricing generate arbitrary prices, because the assumptions/targets are injected into the pricing model arbitrarily.

While the theoretical economic rationale for the price is sophisticated and sound, its practical derivation fails: there are too many subjective assumptions and unknowns to produce a value acceptable to many stakeholders; different estimates reflect different values and preferences. Politics, not science, is the appropriate place for discussing, and ultimately compromising on, the question of what ought to be.

There are different models for injecting science into the political process (like “honest brokering”), which I think are legitimate, but injecting politics into the scientific process and hiding policy arguments behind science comes at the cost of lost trust in this process and its institutions.

Roger Pielke Jr.’s pragmatic proposal to just impose a tax of a few dollars per ton, generating enough revenue to fund serious research into clean, affordable, and reliable energy, becomes far more attractive: the amount is just as arbitrary, but it’s better politics. A similarly earmarked gas tax enjoys long-standing bipartisan support.

I have to concede that carbon dividends (i.e. a carbon tax, border adjustment taxes, and rebates paid out per capita), which I have repeatedly advocated and still think politically feasible, will have to rely on an arbitrary value; the discussion about what it should be has to take place in the political domain.
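Whatever price the political process settles on, the arithmetic of the dividend part is simple. A hypothetical Python sketch, assuming all revenue is rebated equally per person, the tax is fully passed through to consumer prices, and border adjustments are ignored; the function name and numbers are illustrative:

```python
def fee_and_dividend(price_per_t, total_emissions_t, population,
                     household_emissions_t, household_size):
    """Net annual gain (+) or cost (-) for one household under a carbon dividend.

    Hypothetical design: all carbon-tax revenue is rebated equally per capita,
    and the tax is fully passed through to the prices the household pays.
    """
    dividend_per_person = price_per_t * total_emissions_t / population
    tax_paid = price_per_t * household_emissions_t
    return dividend_per_person * household_size - tax_paid


# Hypothetical country: $50/t, 500 Mt CO2/yr, 40 million people (12.5 t per person).
for household_t in (30.0, 50.0, 80.0):
    print(household_t, fee_and_dividend(50.0, 500e6, 40e6, household_t, household_size=4))
```

With these numbers, a four-person household emitting the national average (50 t) breaks even, while lower emitters come out ahead and higher emitters pay on net.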

Alternatively, Carbon Takeback Obligations could turn the question of “the right price” into a matter of true costs, but they lack an easy reimbursement mechanism.

Does climate change pose a risk? Yes, absolutely.

Should we do something about it? You bet!

Could a price on carbon help with that? Certainly!

Should we do absolutely everything, without any regard to the (human) costs of it?

I don’t think so. We need to be smart about our reaction to climate change. We cannot sacrifice democratic liberalism, the integrity of science, and the present’s poor to the anxieties of an “anointed” Western elite about the future.

[1] At least not with regard to anthropogenic emissions. Large volcanic eruptions, a cooling multidecadal cycle in the oceans, a Grand Solar Minimum, or Solar Radiation Management could help keep the temperature below the “threshold”, but not for the intended reasons.

[2] For example, by Nobel laureate F. A. Hayek:

“Once wide coercive powers are given to government agencies…such powers cannot be effectively controlled.”

“The chief evil is unlimited government…nobody is qualified to wield unlimited power.”

“Economic control…is the control of the means for all our ends. And whoever has control of the means must also determine which ends are to be served.”

“There is no justification for the belief that, so long as power is conferred by democratic procedure, it cannot be arbitrary…it is not the source but the limitation of power which prevents it from being arbitrary.”

“The case for individual freedom rests largely upon the recognition of the inevitable and universal ignorance of all of us concerning a great many of the factors on which the achievements of our ends and welfare depend.”

“The argument for liberty is…an argument…against the use of coercion to prevent others from doing better.”

“The individualist…recognizes the limitations of the powers of individual reason and consequently advocates freedom.” - FEE

[3] The objective of degrowth is to scale down the material and energy throughput of the global economy, focusing on high-income nations with high levels of per capita consumption. The idea is to achieve this objective by reducing waste and shrinking sectors of economic activity that are ecologically destructive and offer little if any social benefit […]. - THB